🤖 AI Expert Verdict

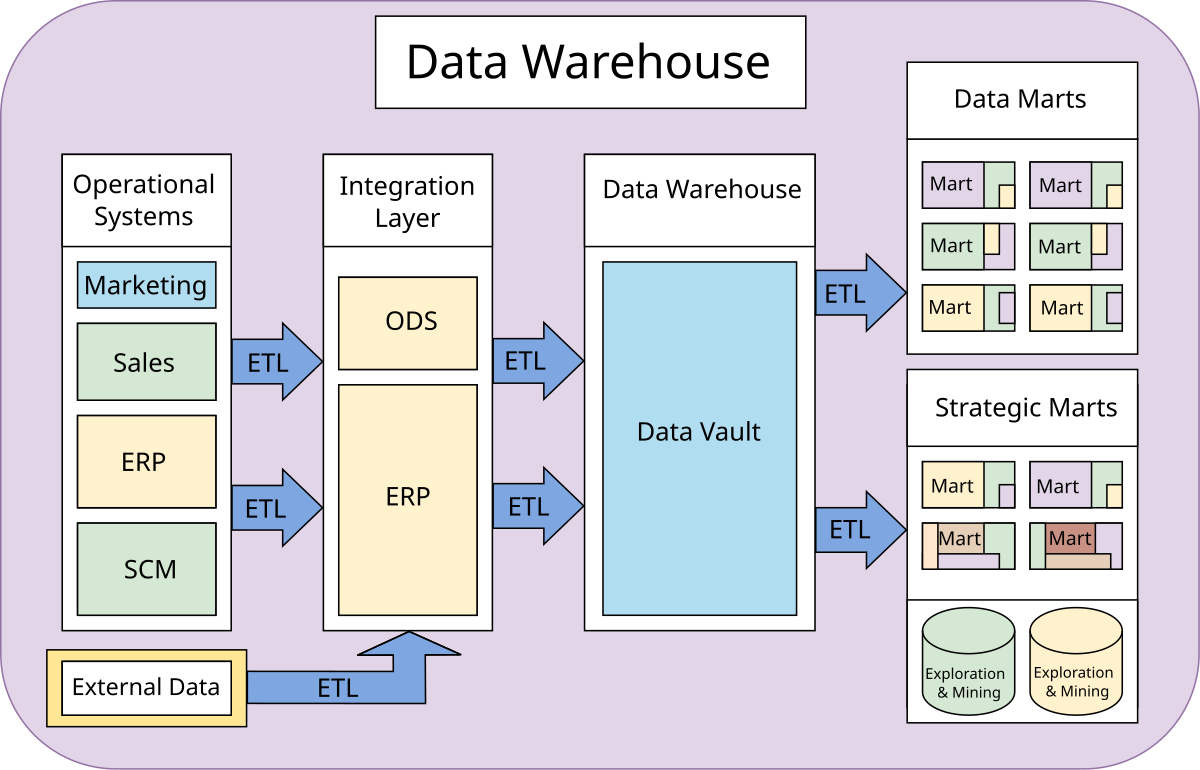

A data warehouse (DW) is a central repository for integrated historical and current data, optimized specifically for reporting, analysis, and supporting business intelligence decisions. DWs use workflows like Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT) to move data from operational systems into the warehouse where it is structured using dimensional or normalized models for analytical access.

- It optimizes data for quick analytical access.

- It supports historical data storage for trend analysis.

- It centralizes data from many disparate sources.

- It reduces the cost of storing the same data redundantly across multiple decision support systems.

A data warehouse (DW) is a system for reporting and data analysis, which makes it a core part of business intelligence (BI). DWs act as central repositories that integrate data from many different sources and organize current and historical data for analysis. Analysts and managers use the resulting insights to make organizational decisions.

How Data Warehouses Handle Data

Data is uploaded from operational systems, such as sales or marketing platforms, and often passes through an operational data store first. Data cleansing ensures quality before the data enters the DW. Two main methods are used to build a DW system: Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT). ETL transforms the data before loading it. ELT loads the raw data first and then performs the necessary transformations inside the data warehouse itself, often using a staging area within the DW.

Databases for Different Goals

Operational databases focus on speed and data integrity. They use database normalization, which keeps data accurate and allows very fast updates, although a single transaction may spread its information across many tables. Data warehouses, by contrast, optimize for analytical access. They typically select specific fields rather than all fields.
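
To make the contrast concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It starts from a small normalized operational schema, where one order is spread across several tables, and copies only the fields needed for analysis into a warehouse table. It does this twice: once in the ETL style (transform in Python, then load) and once in the ELT style (load raw rows into a staging table, then transform with SQL inside the warehouse). All table names, column names, and rows are hypothetical and chosen only for illustration.

```python
import sqlite3

# Hypothetical operational database: one order spans three normalized tables.
oltp = sqlite3.connect(":memory:")
oltp.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT);
    CREATE TABLE order_items (order_id INTEGER, product TEXT, qty INTEGER, unit_price REAL);
    INSERT INTO customers VALUES (1, ' Ada ', 'ada@example.com'), (2, 'Bob', 'bob@example.com');
    INSERT INTO orders VALUES (10, 1, '2024-01-05'), (11, 2, '2024-01-06');
    INSERT INTO order_items VALUES (10, 'widget', 2, 5.0), (10, 'gadget', 1, 20.0),
                                   (11, 'widget', 4, 5.0);
""")

# Extract: join the normalized tables and keep only the fields needed for
# analysis (the email column, for example, is left behind).
rows = oltp.execute("""
    SELECT c.name, o.order_date, i.qty, i.unit_price
    FROM order_items AS i
    JOIN orders AS o ON o.id = i.order_id
    JOIN customers AS c ON c.id = o.customer_id
""").fetchall()

warehouse = sqlite3.connect(":memory:")


def transform(row):
    """Cleanse the customer name and compute the line amount."""
    name, order_date, qty, unit_price = row
    return (name.strip(), order_date, qty * unit_price)


# ETL: transform outside the warehouse, then load the finished rows.
warehouse.execute("CREATE TABLE sales_etl (customer TEXT, order_date TEXT, amount REAL)")
warehouse.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", [transform(r) for r in rows])

# ELT: load the raw rows into a staging table first, then transform with SQL
# inside the warehouse itself.
warehouse.execute(
    "CREATE TABLE staging_sales (customer TEXT, order_date TEXT, qty INTEGER, unit_price REAL)"
)
warehouse.executemany("INSERT INTO staging_sales VALUES (?, ?, ?, ?)", rows)
warehouse.execute("""
    CREATE TABLE sales_elt AS
    SELECT TRIM(customer) AS customer, order_date, qty * unit_price AS amount
    FROM staging_sales
""")

etl_rows = warehouse.execute("SELECT * FROM sales_etl ORDER BY customer, amount").fetchall()
elt_rows = warehouse.execute("SELECT * FROM sales_elt ORDER BY customer, amount").fetchall()
```

Both paths end with the same analytical table. The difference is only where the transformation runs, which in practice tends to depend on how much processing capacity the warehouse itself offers.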

Understanding OLAP and OLTP

We use different database management systems for these two types of processing. Online Transaction Processing (OLTP) handles many short online transactions, such as INSERT, UPDATE, and DELETE statements. OLTP emphasizes fast query processing and maintains detailed, current data. Its performance is measured in transactions per second. Online Analytical Processing (OLAP) uses complex queries that involve aggregations. OLAP systems store aggregated, historical data, often in multi-dimensional schemas such as star schemas, and are widely used for data mining. OLAP performance is measured by response time.

Modeling Your Data Warehouse

You have two important approaches for storing DW data: dimensional and normalized.

1. Dimensional Approach
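
As a minimal sketch of the dimensional approach, the example below builds a tiny star schema in SQLite: one fact table holding a numeric measurement (total price paid) and two dimension tables that supply context (customer and date). The table names, column names, and sample rows are hypothetical, and a real warehouse would use a dedicated analytical database rather than an in-memory SQLite file.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    -- Dimensions: descriptive context for each measurement.
    CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, order_date TEXT, month TEXT);

    -- Fact: a numeric measurement plus a key into each dimension.
    CREATE TABLE fact_sales (
        customer_key INTEGER REFERENCES dim_customer(customer_key),
        date_key INTEGER REFERENCES dim_date(date_key),
        total_price_paid REAL
    );

    INSERT INTO dim_customer VALUES (1, 'Ada'), (2, 'Bob');
    INSERT INTO dim_date VALUES (20240105, '2024-01-05', '2024-01'),
                                (20240210, '2024-02-10', '2024-02');
    INSERT INTO fact_sales VALUES (1, 20240105, 30.0), (1, 20240210, 12.5),
                                  (2, 20240105, 20.0), (1, 20240105, 7.5);
""")

# A typical analytical query: join the fact table to its dimensions and
# aggregate the measurement by customer and month.
sales_by_customer_month = db.execute("""
    SELECT c.customer_name, d.month, SUM(f.total_price_paid)
    FROM fact_sales AS f
    JOIN dim_customer AS c USING (customer_key)
    JOIN dim_date AS d USING (date_key)
    GROUP BY c.customer_name, d.month
    ORDER BY c.customer_name, d.month
""").fetchall()
```

Every analytical question follows the same shape here: pick a measurement from the fact table, then group or filter it by attributes from the dimensions.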

Ralph Kimball proposed the star schema. This approach partitions transaction data into “facts” and “dimensions.” Facts are numeric measurements, like total price paid. Dimensions provide context, such as customer name or order date. This structure makes data easier to understand. It also speeds up data retrieval. However, adding new, unstructured data can be challenging.

2. Normalized Approach (3NF)

Bill Inmon proposed this approach, which is based on an entity-relationship model. Data follows database normalization rules, and the normalized tables are grouped into subject areas such as customers or finance. This approach makes adding new information straightforward. However, it involves many tables, and users may find it hard to join data without deep knowledge of the structure. Both models use joined relational tables and differ only in their degree of normalization. The best choice depends on your specific business problem.

A Brief History

The idea of data warehousing began in the late 1980s. IBM researchers Barry Devlin and Paul Murphy developed the “business data warehouse.” They wanted an architectural model for data flowing to decision support environments. Before this, companies wasted money on massive data redundancy. Multiple decision support systems often needed the exact same stored data. Early DW concepts aimed to reduce these high costs. They provided a centralized, efficient way to manage historical business data.

Facts and Aggregations
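
As a minimal sketch of the roll-up from raw facts to aggregated facts covered in this section, assume each cell tower reports a count of channel allocation requests and the counts are summed to the city level. The city names, tower identifiers, and counts below are hypothetical.

```python
from collections import defaultdict

# Hypothetical raw facts: (city, tower_id, channel_allocation_requests).
raw_facts = [
    ("City A", "tower-1", 120),
    ("City A", "tower-2", 80),
    ("City A", "tower-3", 100),
    ("City B", "tower-4", 60),
]


def aggregate_by_city(facts):
    """Sum per-tower request counts into one aggregated fact per city."""
    totals = defaultdict(int)
    for city, _tower_id, requests in facts:
        totals[city] += requests
    return dict(totals)


city_totals = aggregate_by_city(raw_facts)
```

The raw facts remain available for tower-level questions, while the aggregated facts answer city-level questions without rescanning every tower report.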

A fact is a value or measurement within the managed system. Raw facts come directly from the reporting entity. For instance, a cell tower reports channel allocation requests. Aggregated facts, or summaries, roll up raw data. If a city has three towers, you aggregate the requests to the city level. This process helps extract more relevant business information.

Reference: Inspired by content from https://en.wikipedia.org/wiki/Data_warehouse.